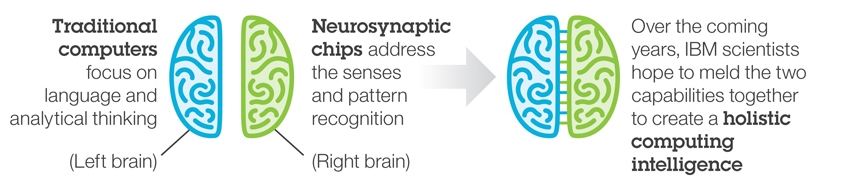

I recently attended a Deep Learning (DL) meetup hosted by Nervana Systems. Deep learning is essentially a technique that allows machines to interpret sensory data. DL attempts to classify unstructured data (e.g. images or speech) by using artificial neural networks (ANNs) to mimic the way the brain does so.

A more formal definition of deep learning is:

DL is a branch of machine learning based on a set of algorithms that attempt to model high-level abstractions in data by using multiple processing layers with complex structures.

I like the description from Watson Adds Deep Learning to Its Repertoire:

Deep learning involves training a computer to recognize often complex and abstract patterns by feeding large amounts of data through successive networks of artificial neurons, and refining the way those networks respond to the input.

This article also presents some of the DL challenges and the importance of its integration with other AI technologies.

From a programming perspective, constructing, training, and testing DL systems starts with assembling ANN layers.
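To make "assembling layers" concrete, here is a minimal, framework-free sketch in plain Python. It only covers construction and a forward pass; training is omitted. The helper names (`dense`, `make_layer`, `forward`), the layer sizes, and the weight range are all assumptions chosen for illustration and do not come from any of the frameworks mentioned in this post.

```python
import math
import random


def relu(z):
    """Rectified linear activation."""
    return max(0.0, z)


def sigmoid(z):
    """Squash a value into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(w . x + b) for each neuron."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def make_layer(n_in, n_out, activation, rng):
    """Hypothetical helper: small random weights and zero biases."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases, activation


def forward(layers, inputs):
    """Feed the input through each layer in turn."""
    for weights, biases, activation in layers:
        inputs = dense(inputs, weights, biases, activation)
    return inputs


rng = random.Random(0)  # fixed seed so the sketch is repeatable
network = [
    make_layer(4, 8, relu, rng),     # input layer: 4 features -> 8 neurons
    make_layer(8, 3, relu, rng),     # hidden layer: 8 -> 3
    make_layer(3, 1, sigmoid, rng),  # output layer: a single score in (0, 1)
]
output = forward(network, [0.5, -1.0, 0.25, 2.0])
```

Real frameworks add the pieces that matter in practice (gradient computation, batching, GPU kernels), but the basic idea of a network as an ordered list of layers is the same.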

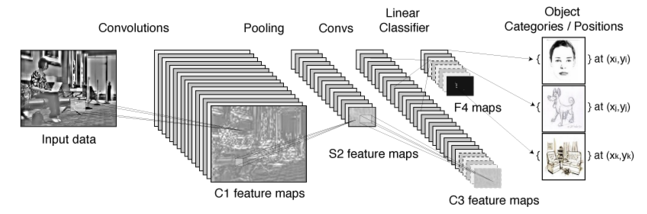

For example, categorization of images is typically done with Convolution Neural Networks (CNNs, see Introduction to Convolution Neural Networks). The general approach is shown here:

Construction of a similar network using the neon framework looks something like this:

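```python
from neon.initializers import Uniform
from neon.layers import Affine, Conv, Pooling
from neon.transforms import Rectlin, Softmax

init_uni = Uniform(low=-0.1, high=0.1)  # assumed range; not given in the original snippet

layers = [Conv((5, 5, 16), init=init_uni, activation=Rectlin(), batch_norm=True),  # 16 5x5 filters
          Pooling((2, 2)),
          Conv((5, 5, 32), init=init_uni, activation=Rectlin(), batch_norm=True),  # 32 5x5 filters
          Pooling((2, 2)),
          Affine(nout=500, init=init_uni, activation=Rectlin(), batch_norm=True),  # fully connected
          Affine(nout=10, init=init_uni, activation=Softmax())]                    # 10 class scores
```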
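It can help to trace how the data shrinks as it passes through a stack like this. The sketch below is plain Python and does not use neon. It assumes a 32x32 RGB input (CIFAR-10 sized), convolutions with stride 1 and no padding, and pooling whose stride equals its window. None of these values are stated in the snippet above, so treat them as assumptions. The helper names are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window):
    """Output width/height of pooling whose stride equals its window."""
    return (size - window) // window + 1


def trace_shapes(height, width, channels, spec):
    """Return the (height, width, channels) shape after each layer in spec."""
    shapes = []
    for kind, size, filters in spec:
        if kind == "conv":
            height, width = conv_out(height, size), conv_out(width, size)
            channels = filters  # one output channel per filter
        else:  # "pool" keeps the channel count
            height, width = pool_out(height, size), pool_out(width, size)
        shapes.append((kind, (height, width, channels)))
    return shapes


# Mirrors the convolution and pooling layers of the network above.
spec = [("conv", 5, 16), ("pool", 2, None), ("conv", 5, 32), ("pool", 2, None)]
shapes = trace_shapes(32, 32, 3, spec)

last_h, last_w, last_c = shapes[-1][1]
flattened = last_h * last_w * last_c  # inputs to the first fully connected layer
```

Under those assumptions the feature maps go 28x28x16, 14x14x16, 10x10x32, 5x5x32, so the first fully connected layer sees 800 values, which it maps to 500 and then to 10 class scores.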

Properly training an ANN involves processing very large quantities of data. Because of this, most frameworks (see below) utilize GPU hardware acceleration. Most use the NVIDIA CUDA Toolkit.

Each application of DL (e.g. image classification, speech recognition, video parsing, big data, etc.) has its own idiosyncrasies, which are the subject of extensive research at many universities. And of course, large companies are leveraging machine intelligence for commercial purposes (Siri, Cortana, self-driving cars).

Popular DL/ANN frameworks include:

- neon | Python-based deep learning library (Nervana)

- Caffe | Deep Learning Framework (Berkeley)

- Torch | Scientific computing for LuaJIT (Facebook/Twitter/Google collaboration)

- TensorFlow | An Open Source Software Library for Machine Intelligence (Google)

Many good DL resources are available at: Deep Learning.

Here's a good introduction: Deep Learning: An MIT Press book in preparation